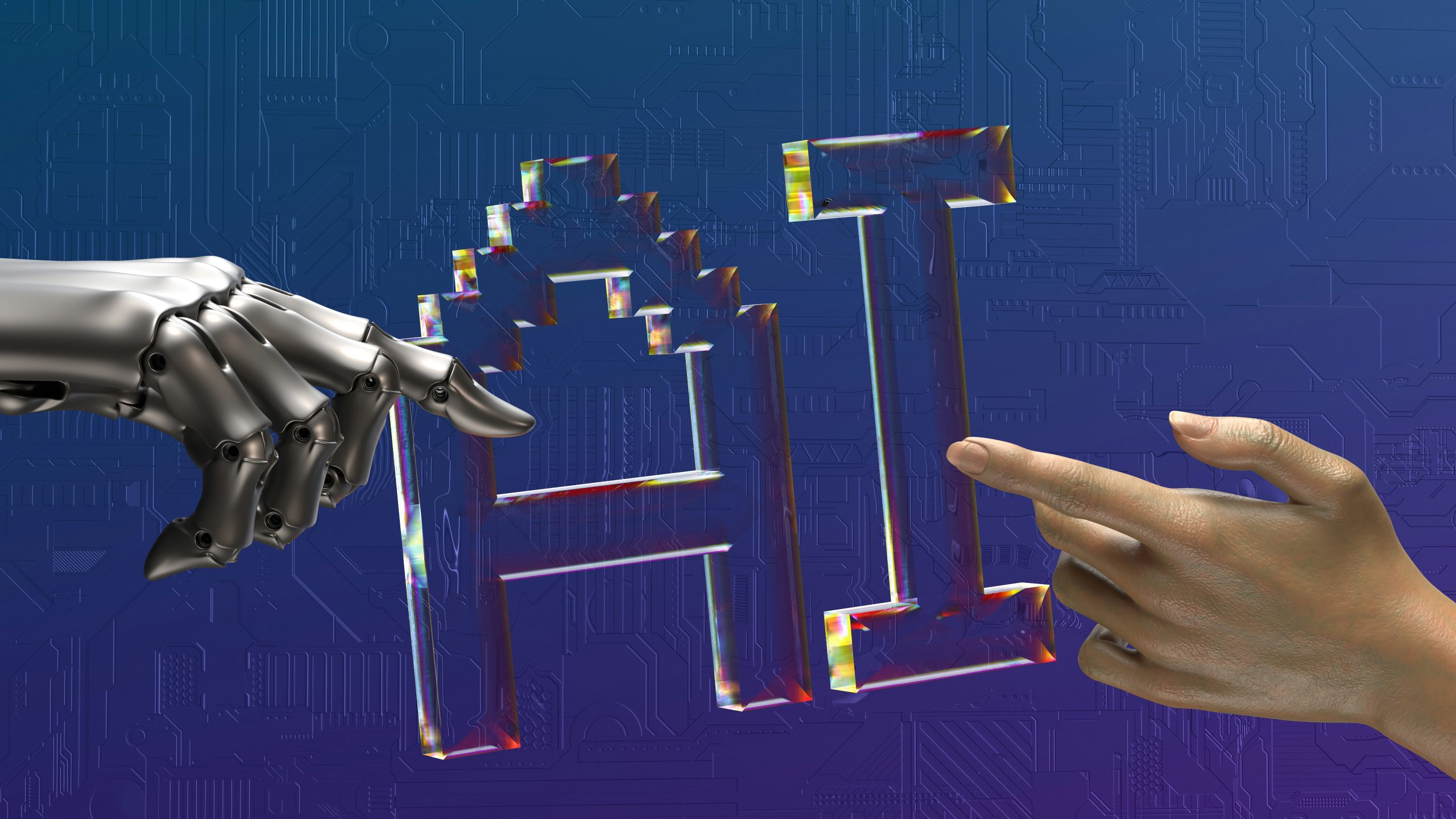

Benchmark Graphics Cards for Gaming and AI

Choosing the right graphics card in 2025 is no easy task. Whether you’re a gamer chasing silky-smooth 4K frame rates or an AI enthusiast running computationally intensive models, you need hard facts to make your decision. Benchmarking graphics cards gives you the unvarnished truth about performance, so you can see past inflated specs and pick the GPU you actually need. I’ve spent countless hours testing GPUs for customers, from gaming systems to AI workstations, and let me tell you, a decent benchmark is worth its weight in gold when it saves you time, money, and frustration.

This post walks you through benchmarking graphics cards for gaming and AI workloads in 2025, with step-by-step actions and tools for getting consistent results.

Why Benchmark Graphics Cards in 2025?

With GPUs such as Nvidia’s RTX 5090, AMD’s RX 9070 XT, and Intel’s Arc Battlemage cards on the market, 2025 is a huge year for graphics cards. But specs such as CUDA core counts or VRAM size don’t paint the entire picture: actual GPU performance differs between gaming and AI workloads. Benchmarking quantifies frame rates, processing speeds, and efficiency, which lets you compare cards such as the RTX 4090 and the RX 7900 XTX on equal terms. Whether you’re assembling a gaming rig or an AI machine for Stable Diffusion, benchmarking is how you make sure you’re getting the most bang for your money. Here’s how to do it.

Step 1: Know Your Objectives

Before you ever benchmark graphics cards, define your requirements:

Gaming: Focus on frame rates (FPS), target resolutions (1080p, 1440p, 4K), and features such as ray tracing or DLSS 3. Benchmark with demanding games such as Cyberpunk 2077 or Starfield.

AI Workloads: Measure throughput for applications such as Stable Diffusion or PyTorch model training. Check that the card supports the compute stack you need (CUDA on Nvidia, ROCm on AMD) and has enough VRAM for your models.
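To make the VRAM check concrete, here is a rough sketch that estimates how much memory a model’s weights need. The 7-billion-parameter model, the FP16 storage format, and the 1.2 headroom factor are all assumptions chosen for illustration; real usage also includes activations and framework overhead, so treat the result as a lower bound.

```python
def weights_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


def fits_in_vram(num_parameters, bytes_per_parameter, vram_gb, headroom=1.2):
    """Rough check: weights times an assumed headroom factor must fit in VRAM."""
    return weights_gb(num_parameters, bytes_per_parameter) * headroom <= vram_gb


# Hypothetical 7-billion-parameter model stored in FP16 (2 bytes per parameter).
needed = weights_gb(7e9, 2)
print(f"weights: {needed:.1f} GB, fits in 24 GB: {fits_in_vram(7e9, 2, 24)}")
```

If the estimate lands close to the card’s VRAM, a quantized or smaller model is probably the safer test case.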

Pro Tip: I helped one client benchmark an RTX 4080 for gaming and AI and found that it was overkill for 1080p gaming but ideal for AI inference. Identify your use case first so you can pick the right tests.

Step 2: Choose the Right Benchmarking Tools

The best benchmarking tools depend on your use case:

For Gaming:

3DMark Time Spy: Tests DirectX 12 performance and is a good measure of overall GPU performance. The paid edition costs ~$35, but a free demo is available.

Unigine Superposition: Checks 4K and VR performance, free for simple tests.

In-Game Benchmarks: Some games like Shadow of the Tomb Raider support in-game benchmarks for real-world FPS.

For AI:

MLPerf Client: Checks AI inference performance, free and open-source.

Stable Diffusion Benchmark: Measures image generation speed, ideal for creative AI tasks.

Blender Benchmark: Measures GPU rendering performance rather than AI inference directly, but it is a useful check for the 3D work that often accompanies AI tasks. Free to use.

Pro Tip: I used 3DMark to benchmark a client’s RX 7600, confirming it handled 1440p gaming well. Download tools from official sites like UL.com or Unigine.com.

Step 3: Set Up Your Test Environment

A clean setup ensures accurate GPU performance results:

Update Drivers: Get the latest Nvidia, AMD, or Intel drivers from their official websites. Outdated drivers can skew results, sometimes by 10-20%.

Close Background Apps: Close apps such as browsers or Discord to release CPU and RAM.

Stable Hardware: Use a recent CPU (such as the Ryzen 7 9800X3D) and at least 16GB of RAM so the GPU is not held back by other components. Test on a fresh Windows 11 install for consistency.
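Because results only compare cleanly when the setup is the same, it can help to save a small record of the test environment with every run. The sketch below uses only Python’s standard library; the function name and the hand-entered GPU and driver strings are placeholders, not output from any vendor tool.

```python
import json
import platform
from datetime import datetime, timezone


def environment_snapshot(gpu_name, driver_version):
    """Collect basic system details to store next to each benchmark result."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        # GPU name and driver version are entered by hand in this sketch.
        "gpu": gpu_name,
        "driver": driver_version,
    }


snapshot = environment_snapshot("example GPU", "example driver version")
print(json.dumps(snapshot, indent=2))
```

Saving this next to each score makes it easier to spot when a driver or OS change, rather than the GPU, explains a difference.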

Pro Tip: I once received inconsistent benchmarks because of a background app consuming resources—closing it resolved the issue. Keep an eye on system use with Task Manager.

Step 4: Benchmark Graphics Cards for Gaming

Here’s how to benchmark graphics cards for gaming:

Install 3DMark: Download it from Steam or UL.com, then run Time Spy (DirectX 12) or Fire Strike (DirectX 11).

Run Tests: Choose your resolution (e.g., 1440p) and settings (e.g., Ultra with ray tracing). Run several tests for consistency.

Record FPS: Note both the average FPS and the 1% low FPS (an indicator of smoothness). For instance, the RTX 5090 reaches ~100 FPS at 4K in Cyberpunk 2077 with DLSS.

Compare Results: Compare your card with others using Tom’s Hardware GPU hierarchy or GPUCheck.com.
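If your capture tool exports per-frame times, both numbers from the recording step can be computed directly. The sketch below assumes frame times in milliseconds and defines the 1% low as the average frame rate over the slowest 1% of frames; tools differ on the exact definition, so this is one reasonable choice rather than a standard. The sample data is made up.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) from per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    # Slowest 1% of frames, but always at least one frame.
    worst_count = max(1, n // 100)
    worst = sorted(frame_times_ms, reverse=True)[:worst_count]
    low_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, low_fps


# Made-up capture: 99 smooth frames at 10 ms and one 40 ms stutter.
frame_times = [10.0] * 99 + [40.0]
avg, low = fps_summary(frame_times)
print(f"average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
```

A large gap between the two values points to stutter that the average alone would hide.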

Pro Tip: One client’s benchmark of an RTX 4070 Ti yielded 80 FPS at 1440p, which settled their upgrade decision. Run every test three times and average the results to smooth out anomalies.
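Repeated runs are easier to judge with a mean and a measure of spread. Here is a minimal sketch using Python’s `statistics` module; the three FPS values are invented, and the function name is just a label for this example.

```python
import statistics


def summarize_runs(fps_runs):
    """Return mean, sample standard deviation, and variation in percent."""
    mean = statistics.mean(fps_runs)
    # stdev needs at least two runs; a single run has no measurable spread.
    spread = statistics.stdev(fps_runs) if len(fps_runs) > 1 else 0.0
    return {"mean": mean, "stdev": spread, "cv_percent": spread / mean * 100.0}


runs = [79.0, 80.0, 81.0]  # invented results from three passes
summary = summarize_runs(runs)
print(summary)
```

If the variation between runs is large, look for background load or thermal throttling before trusting the average.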

Step 5: Benchmark Graphics Cards for AI Workloads

AI workloads require different metrics:

Set Up MLPerf: Download MLPerf Client from GitHub and set up for your GPU (Nvidia’s CUDA or AMD’s ROCm).

Test Stable Diffusion: Use Automatic1111’s Stable Diffusion web UI to measure image generation time (e.g., seconds per 512×512 image). The RTX 4090 takes roughly 1.5s per image, though the exact figure depends on the model, sampler, and step count.

Run Blender: Download Blender Benchmark and test rendering performance on AI-related 3D workloads.

Analyze Throughput: Pay attention to samples per second (e.g., images or tokens handled). Nvidia’s RTX 50-series excels at AI workloads, according to Tom’s Hardware.
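Samples per second can also be measured with a small timing harness around your own workload. The sketch below is generic: `stand_in_workload` is a CPU placeholder for real work such as generating a batch of images, and the batch size of 4 is arbitrary. With asynchronous GPU frameworks, the workload should wait for the GPU to finish before returning (in PyTorch, `torch.cuda.synchronize()` does this), otherwise the timing will look better than it is.

```python
import time


def measure_throughput(workload, samples_per_call, iterations=10, warmup=2):
    """Return samples per second for a callable that processes one batch."""
    for _ in range(warmup):
        workload()  # warm-up calls are not timed
    start = time.perf_counter()
    for _ in range(iterations):
        workload()
    elapsed = time.perf_counter() - start
    return samples_per_call * iterations / elapsed


calls = []


def stand_in_workload():
    # Placeholder for real work such as generating a batch of images.
    calls.append(sum(range(50_000)))


rate = measure_throughput(stand_in_workload, samples_per_call=4)
print(f"{rate:.1f} samples/second")
```

The warm-up calls keep one-time costs, such as model loading or kernel compilation, out of the measured window.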

Pro Tip: I tested an RTX A5000 for a client and confirmed that its 24GB of VRAM could hold large AI models. Log results in a spreadsheet for comparison.
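One low-effort way to get results into a spreadsheet is to write them as CSV, which Excel and Google Sheets both open. In this sketch the column names and the two rows are illustrative placeholders, not measured data.

```python
import csv
import io

FIELDS = ["gpu", "benchmark", "metric", "value"]


def write_results(rows, handle):
    """Write benchmark rows as CSV to any open text handle."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# Placeholder rows; replace with your own measurements.
rows = [
    {"gpu": "GPU A", "benchmark": "Stable Diffusion 512x512",
     "metric": "seconds_per_image", "value": 1.5},
    {"gpu": "GPU B", "benchmark": "Stable Diffusion 512x512",
     "metric": "seconds_per_image", "value": 2.1},
]
buffer = io.StringIO()
write_results(rows, buffer)
print(buffer.getvalue())
```

To write a real file, pass a handle opened with `open("results.csv", "w", newline="")` instead of the in-memory buffer.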

Step 6: Measure Power and Thermal Performance

GPU performance isn’t solely about speed—efficiency counts:

Power Draw: Monitor wattage with HWMonitor. For instance, the RTX 5090 can average around 400W under load, although its rated board power is 575W and heavy workloads can approach that.

Temperature: Monitor temps using MSI Afterburner. Keep GPUs below 85°C for gaming and 90°C for AI to prevent throttling.
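On Nvidia cards, the same readings can be logged from a script through the `nvidia-smi` command-line tool, which is an alternative to the GUI tools named above. The sketch below parses one sample and checks it against the temperature limits from this step. It assumes `nvidia-smi` is on the PATH and that the card reports power draw (some report `[N/A]`, which this simple parser does not handle); the sample line is made up.

```python
import subprocess

# Limits taken from the guidance in this step.
MAX_TEMP_C = {"gaming": 85.0, "ai": 90.0}

QUERY = [
    "nvidia-smi",
    "--query-gpu=power.draw,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_sample(line):
    """Turn a line such as '312.45, 71' into (watts, degrees_celsius)."""
    power, temp = (field.strip() for field in line.split(","))
    return float(power), float(temp)


def read_gpu_sample():
    """Query the first Nvidia GPU; requires nvidia-smi on the PATH."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_sample(result.stdout.splitlines()[0])


def too_hot(temp_c, workload):
    """True when the temperature has reached the limit for this workload."""
    return temp_c >= MAX_TEMP_C[workload]


watts, temp = parse_sample("312.45, 71")  # made-up sample line
print(watts, temp, too_hot(temp, "gaming"))
```

Calling `read_gpu_sample()` once per second during a benchmark run gives a simple log of power and temperature over time.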

Pro Tip: One client’s RX 6800 XT ran hot at 90°C under an AI workload, so we improved case airflow, which lowered temps by 10°C. Monitor temps during each benchmark run.
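Efficiency can be reduced to a single number by dividing performance by power draw. This is a minimal sketch with invented figures for two unnamed cards; substitute your own average FPS and wattage.

```python
def fps_per_watt(avg_fps, avg_watts):
    """Frames per second delivered per watt of average power draw."""
    if avg_watts <= 0:
        raise ValueError("average power draw must be positive")
    return avg_fps / avg_watts


# Invented (average FPS, average watts) pairs for illustration only.
cards = {"GPU A": (100.0, 400.0), "GPU B": (80.0, 250.0)}
efficiency = {name: fps_per_watt(fps, watts) for name, (fps, watts) in cards.items()}
for name, value in efficiency.items():
    print(f"{name}: {value:.2f} FPS per watt")
```

In this made-up case the slower card is the more efficient one, which matters if power or cooling is limited.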

Step 7: Analyze and Compare Results

Transform raw data into meaningful insights:

Gaming: Compare FPS across resolutions and games. If the RTX 5060 reaches 60 FPS at 1440p but the RX 9060 XT reaches 70 FPS in the same test, the latter is the better choice for gaming.

AI: Examine the throughput (e.g., images/second). Nvidia’s RTX 50-series is better for Stable Diffusion, while AMD’s RX 9000-series provides value.

Pro Tip: I organized a client’s benchmark scores into a table, and the RTX 4080 performed 20% better for AI compared to the RX 7900 XTX. Use Excel or Google Sheets for readability.
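Relative differences are easier to read than raw scores. The sketch below turns two results into a percentage, using the 60 FPS and 70 FPS figures from the gaming example in this step; the function name is just a label for this illustration.

```python
def percent_faster(candidate, baseline):
    """How much faster the candidate is than the baseline, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (candidate - baseline) / baseline * 100.0


# Figures from the 1440p example: 70 FPS versus a 60 FPS baseline.
gain = percent_faster(70.0, 60.0)
print(f"{gain:.1f}% faster")
```

The same function works for throughput figures such as images per second, as long as higher means better.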

Step 8: Tune Your Setup

Leverage benchmark scores to fine-tune your system:

Overclocking: Use MSI Afterburner to raise GPU clocks in small steps (e.g., +100MHz) if performance falls short, and keep an eye on temps.

Driver Settings: In the Nvidia or AMD control panel, choose the performance-oriented profile for gaming or, where the driver offers one, a compute-oriented mode for AI.

Pro Tip: Driver tweaking for a client’s RTX 3060 boosted FPS by 10% in Starfield. Test tweaks on a single game or task first.
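Before crediting a tweak with a gain, it is worth checking that the improvement is larger than normal run-to-run variation. The sketch below uses a rough rule of thumb (the gain must exceed twice the larger standard deviation), which is an assumption of this example rather than a formal statistical test. The FPS values are invented, and each list needs at least two runs.

```python
import statistics


def gain_exceeds_noise(before_runs, after_runs, factor=2.0):
    """Rough check that the mean gain is larger than run-to-run variation."""
    gain = statistics.mean(after_runs) - statistics.mean(before_runs)
    noise = max(statistics.stdev(before_runs), statistics.stdev(after_runs))
    return gain > factor * noise


before = [60.0, 61.0, 59.0]  # invented runs before the tweak
after = [66.0, 67.0, 65.0]   # invented runs after the tweak
print(gain_exceeds_noise(before, after))
```

If the check fails, run more passes before deciding whether to keep the tweak.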

Step 9: Keep Up on 2025 Trends

GPU performance changes quickly:

New Releases: Monitor Nvidia’s RTX 50-series Super models or AMD’s RDNA 4 updates, according to PC Gamer.

AI Breakthroughs: Follow MLPerf for fresh AI benchmarks, since frameworks such as PyTorch keep evolving.

Pro Tip: I re-benchmarked a customer’s GPU following a driver update and saw a 5% change in performance. Update and re-benchmark monthly.

Conclusion

Benchmarking GPUs in 2025 is your key to selecting the ideal GPU for gaming or AI. Tools such as 3DMark, MLPerf, and Blender Benchmark let you measure FPS, throughput, and efficiency, giving you simple data to compare cards such as the RTX 5090 or RX 9070 XT. Begin by establishing a clean test environment, then run gaming and AI benchmarks and review the results to inform your purchase or optimization. Whether you’re assembling a 4K gaming rig or an AI workstation, these steps help you get the best GPU performance.